If my penchant for removing incredibly specific, minor inconveniences from my life with overly-complicated, home-grown automation tools wasn't yet fully evident, get ready to be dazzled by the lengths to which I go with this one.

It's winter time here in terrible Phoenix, Arizona, and that means temperatures with highs in the high-70s to low-80s, and lows in the mid-40s. Translated: it's a bit too warm to turn on the heater, and a bit too cool to necessitate air-conditioning.

As a result, over the course of a day, the temperature inside our home ranges from 70F in the morning to upwards of 78F by late afternoon. Since I work at home and I hate feeling hot, I like to keep the front and back doors to the house open in the mornings and evenings, in an effort to cool the house enough to keep the mid-day temperatures inside below 75F.

Generally, that means keeping the doors open in the morning until the temperature outside rises above 70F, and keeping them closed until the temperature drops back down below 70F in the evening.

"I don't see the problem," you say. "Just shout at Siri or your Echo Dot and ask the temperature periodically. Or just look down at your Apple Watch. Or literally any number of other options at your disposal."

Yes, I totally hear you.

Now, take a deep breath because it's going to get weird.

Most weather services use a weather station downtown or at the airport of your city. In my case, those weather stations are 25 miles away and on the other side of a very large mountain. The result is that they're almost always off by about 3 or 4 degrees for my neighborhood.

So, I primarily monitor the temperature with a Weather Underground station located less than a half-mile from my home. I keep the WU widget in my Today view on my phone and periodically swipe over, scroll down, and wait for it to update. I love a lot of things about Weather Underground. The speed at which its app refreshes is definitely not one of them. In fact, I usually end up launching the app from the widget in order to make sure it's actually refreshed and not showing me old data. And don't even get me started on its Apple Watch complication. It's tiny and ugly and I hate it.

Are you still reading? Okay, good.

Unrelated to the weather, I've recently begun playing around with Pushover on iOS to send myself custom push notifications based on whatever criteria I deem worthy of a notification. It's super simple to set up and use, has a ton of flexibility, and does exactly what you'd expect it to do. I love it.

I've heard of people using it to alert themselves when a long video render has completed so they can go about their day without needlessly checking the progress bar on their computer. A very cool use case that I will definitely investigate. But, on this morning, I thought to myself, how cool would it be if I could set up Pushover to send me a notification when the temperature at my local WU station goes above / drops below 70F?

To the WU

In addition to being a very cool service, Weather Underground has a nice developer API. You can sign up for a free developer account that will let you request Current Conditions up to 500 times per day. That's more than enough for what I want to do.

With a simple call of:

curl http://api.wunderground.com/api/DEVELOPERID/conditions/q/AZ/pws:EXAMPLESTATION.json

I get a return like this:

{

"response": {

"version":"0.1",

"termsofService":"http://www.wunderground.com/weather/api/d/terms.html",

"features": {

"conditions": 1

}

}

, "current_observation": {

"image": {

"url":"http://icons.wxug.com/graphics/wu2/logo_130x80.png",

"title":"Weather Underground",

"link":"http://www.wunderground.com"

},

"display_location": {

"full":"Phoenix, AZ",

"city":"Phoenix",

"state":"AZ",

"state_name":"Arizona",

"country":"US",

"country_iso3166":"US",

"zip":"XXXXX",

"magic":"1",

"wmo":"99999",

"latitude":"33.XXXXXX",

"longitude":"-112.XXXXXX",

"elevation":"373.1"

},

"observation_location": {

"full":"Example Station, Phoenix, Arizona",

"city":"Example Station, Phoenix",

"state":"Arizona",

"country":"US",

"country_iso3166":"US",

"latitude":"33.XXXXXXX",

"longitude":"-112.XXXXXX",

"elevation":"1214 ft"

},

"estimated": {

},

"station_id":"EXAMPLESTATION",

"observation_time":"Last Updated on February 6, 11:46 AM MST",

"observation_time_rfc822":"Tue, 06 Feb 2018 11:46:53 -0700",

"observation_epoch":"1517942813",

"local_time_rfc822":"Tue, 06 Feb 2018 11:47:00 -0700",

"local_epoch":"1517942820",

"local_tz_short":"MST",

"local_tz_long":"America/Phoenix",

"local_tz_offset":"-0700",

"weather":"Clear",

"temperature_string":"72.4 F (22.4 C)",

"temp_f":72.4,

"temp_c":22.4,

"relative_humidity":"21%",

"wind_string":"From the SE at 1.0 MPH Gusting to 3.0 MPH",

"wind_dir":"SE",

"wind_degrees":139,

"wind_mph":1.0,

"wind_gust_mph":"3.0",

"wind_kph":1.6,

"wind_gust_kph":"4.8",

"pressure_mb":"1014",

"pressure_in":"29.95",

"pressure_trend":"-",

"dewpoint_string":"30 F (-1 C)",

"dewpoint_f":30,

"dewpoint_c":-1,

"heat_index_string":"NA",

"heat_index_f":"NA",

"heat_index_c":"NA",

"windchill_string":"NA",

"windchill_f":"NA",

"windchill_c":"NA",

"feelslike_string":"72.4 F (22.4 C)",

"feelslike_f":"72.4",

"feelslike_c":"22.4",

"visibility_mi":"10.0",

"visibility_km":"16.1",

"solarradiation":"--",

"UV":"4","precip_1hr_string":"0.00 in ( 0 mm)",

"precip_1hr_in":"0.00",

"precip_1hr_metric":" 0",

"precip_today_string":"0.00 in (0 mm)",

"precip_today_in":"0.00",

"precip_today_metric":"0",

"icon":"clear",

"icon_url":"http://icons.wxug.com/i/c/k/clear.gif",

"forecast_url":"http://www.wunderground.com/US/AZ/Phoenix.html",

"history_url":"http://www.wunderground.com/weatherstation/WXDailyHistory.asp?ID=EXAMPLESTATION",

"ob_url":"http://www.wunderground.com/cgi-bin/findweather/getForecast?query=33.XXXXXX,-112.XXXXXX",

"nowcast":""

}

}

It's a lot, I know. But it includes everything we would ever want to know about our hyper-local weather station, including the current temperature, which appears right after the key labeled temp_f. With a quick REGEX, we can search through this response and pull out just the current temperature in Fahrenheit.

That REGEX looks like this:

(?<="temp_f":)(.*?)(?=,)
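If you'd rather see that pattern in action, here's a minimal sketch in Python (my choice for illustration, not necessarily the tool doing the work here). It runs the same regex against a trimmed-down stand-in for the response above, then compares the result to the 70F cutoff. `SAMPLE_RESPONSE` and the function names are made up for the example, and the JSON-parsing variant is just an alternative worth knowing about.

```python
import json
import re

# Trimmed-down stand-in for the API response shown above (most fields omitted).
SAMPLE_RESPONSE = (
    '{"current_observation": {"station_id":"EXAMPLESTATION",'
    '"temp_f":72.4,"temp_c":22.4}}'
)

# Same pattern as above: whatever sits between "temp_f": and the next comma.
TEMP_F_PATTERN = re.compile(r'(?<="temp_f":)(.*?)(?=,)')

THRESHOLD_F = 70.0  # the open/close-the-doors cutoff from earlier in the post


def temp_from_regex(raw):
    """Pull temp_f out of the raw response text with the regex."""
    match = TEMP_F_PATTERN.search(raw)
    if match is None:
        raise ValueError("temp_f not found in response")
    return float(match.group(1))


def temp_from_json(raw):
    """Alternative: parse the JSON and read the field directly."""
    return float(json.loads(raw)["current_observation"]["temp_f"])


def doors_should_be_closed(temp_f, threshold=THRESHOLD_F):
    """True once the outside temperature is above the threshold."""
    return temp_f > threshold
```

The regex depends on the response being formatted exactly as shown (no space after the colon, a comma after the value). Parsing the JSON is a bit more forgiving if that formatting ever shifts.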

The Push

Once we've determined our current temperature is above 70.0F, we'll send ourselves a notification with Pushover by running a command that looks like this:

curl -s \
  --form-string "token=MY_TOKEN" \
  --form-string "user=MY_USERID" \
  --form-string "message=It's above 70F outside." \
  --form-string "title=Close the Doors" \
  https://api.pushover.net/1/messages.json
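For the curious, here's a rough Python sketch of the same idea, with the "goes above / drops below" part bolted on so a notification only fires when the temperature actually crosses 70F. Everything named here is an assumption for illustration: `crossing_message`, `build_pushover_request`, and the "Open the Doors" wording are mine. It also sends the fields URL-encoded rather than as the multipart form that curl's `--form-string` produces, on the assumption that Pushover accepts either.

```python
import urllib.parse
import urllib.request

PUSHOVER_URL = "https://api.pushover.net/1/messages.json"


def crossing_message(previous_f, current_f, threshold=70.0):
    """Return (title, message) if the temperature crossed the threshold, else None."""
    if previous_f <= threshold < current_f:
        return ("Close the Doors", f"It's above {threshold:g}F outside.")
    if previous_f >= threshold > current_f:
        # Hypothetical wording for the evening notification.
        return ("Open the Doors", f"It's below {threshold:g}F outside.")
    return None


def build_pushover_request(token, user, title, message):
    """Build (but don't send) a POST with the same four fields as the curl command."""
    fields = {"token": token, "user": user, "title": title, "message": message}
    body = urllib.parse.urlencode(fields).encode("utf-8")
    return urllib.request.Request(PUSHOVER_URL, data=body, method="POST")
```

Passing the result of `build_pushover_request` to `urllib.request.urlopen` would actually send it. Either way, the end result is the same notification the curl command produces.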

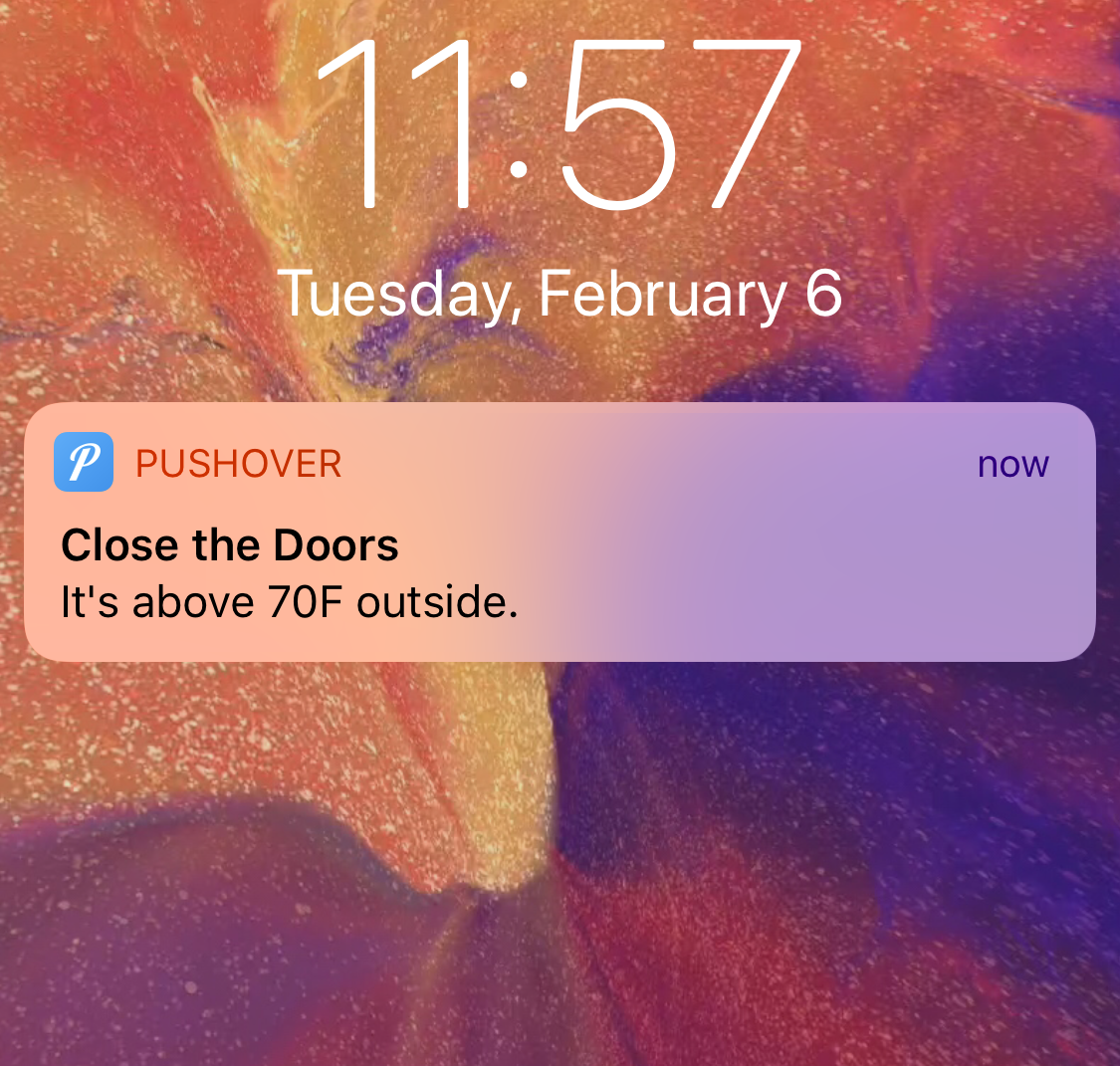

Which pops up on my iPhone and Apple Watch looking like this: